AI Detectors Are Bollocks: Why I Don't Give a Toss What They Think

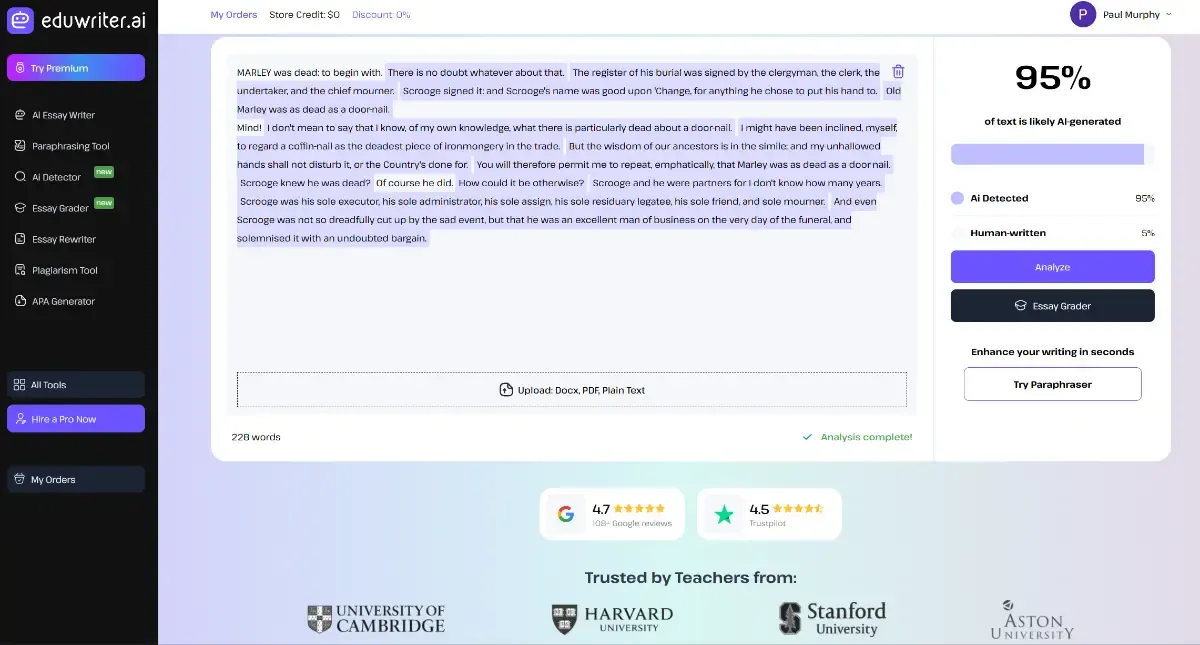

Eduwriter.ai, 'trusted by Cambridge, Stanford, and Harvard,' scores Charles Dickens' A Christmas Carol (1843) as 95% AI-generated.

I ran a passage from Charles Dickens through an AI detector the other day. It came back 95.43% AI-generated.

The detector was Eduwriter.ai, which claims to be trusted by teachers and students at Cambridge, Stanford, Harvard, and Aston Universities. The passage was the first three paragraphs of A Christmas Carol, which Dickens published in 1843.

Four other detectors gave identical results:

- ZeroGPT

- NoteGPT

- Justdone

- Ai.detectorwriter

They're likely all using the same underlying engine, which means multiple companies are selling the same broken technology under different brands. Scispace went even further, confidently reporting the text was 100% AI.

Charles Dickens. Dead since 1870. Wrote with a quill pen. Apparently a robot.

If that doesn't tell you everything you need to know about AI detectors, nothing will.

The Great AI Panic

We're living through a moral panic about artificial intelligence, and like all moral panics, it's making people stupid. Teachers are convinced their students are cheating. Editors are running every submission through detection software. Reddit moderators are banning people for "AI-generated content" based on nothing but a dodgy algorithm's best guess.

And the tool they're all using to catch the cheaters? AI detectors. Which, as it turns out, are about as reliable as a chocolate teapot.

I know this because I've been testing them. Not because I'm trying to cheat (I'm 70 years old and I've got nothing to prove to anyone), but because I wanted to see what all the fuss was about.

My Experiment

I wrote an article. A proper one, from scratch, about a subject I know well. I edited it, tightened it up, made sure it said what I wanted it to say. Then I ran it through thirteen different AI detectors.

The results? Utter chaos:

- QuillBot: 0% AI

- Copyleaks: 0% AI

- Winston: 1% AI

- Scispace: 2% AI

- Grammarly: 8% AI

- Youscan: 25% AI

- Decopy: 29% AI

- NoteGPT: 47.26% AI

- ZeroGPT: 47.26% AI

- Undetectable: 50% AI

- Originality: 56% original (44% AI)

- Detecting AI: 61.4% AI

- GPTZero: 80% AI

Thirteen detectors. Same article. Wildly different results, from 0% AI to 80% AI. Statistically speaking, that's less consistency than a weather forecast written by a Labrador.
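For anyone who would rather have numbers than a Labrador, here is a rough Python sketch that summarises the thirteen scores listed above. The variable names are illustrative, and Originality's "56% original" is entered as 44% AI, as in the list.

```python
from statistics import mean, median, pstdev

# AI scores (percent) reported for the same article, copied from the list above.
# Originality's "56% original" is entered as 44% AI.
scores = {
    "QuillBot": 0.0,
    "Copyleaks": 0.0,
    "Winston": 1.0,
    "Scispace": 2.0,
    "Grammarly": 8.0,
    "Youscan": 25.0,
    "Decopy": 29.0,
    "NoteGPT": 47.26,
    "ZeroGPT": 47.26,
    "Undetectable": 50.0,
    "Originality": 44.0,
    "Detecting AI": 61.4,
    "GPTZero": 80.0,
}

values = sorted(scores.values())

summary = {
    "detectors": len(values),
    "distinct_scores": len(set(values)),       # some detectors agree exactly
    "lowest": values[0],
    "highest": values[-1],
    "spread": values[-1] - values[0],          # gap between the extremes
    "mean": round(mean(values), 1),
    "median": median(values),
    "std_dev": round(pstdev(values), 1),       # population standard deviation
    "above_50": sum(v > 50 for v in values),   # detectors calling it mostly AI
}

for name, value in summary.items():
    print(f"{name}: {value}")
```

On these figures the scores run from 0 to 80 with a median of 29, and only two of the thirteen detectors put the article above 50% AI.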

Then I tried the Dickens passage. Five detectors scored it 95.43% AI. One said 100% AI. Several others, in fairness, correctly identified it as human.

But if Charles Dickens can't reliably pass an AI detector, what chance does a university student have?

What Are These Things Actually Detecting?

AI detectors claim to spot patterns that large language models use: certain phrases, sentence structures, transitions, and rhythms that supposedly give away non-human writing.

The problem? Those same patterns exist in good human writing. They always have.

Here are some phrases AI detectors flag as "suspicious":

- "Let's be clear"

- "It's important to note"

- "Furthermore"

- "In conclusion"

- "Delve into"

Standard English. Transition phrases people have used for centuries. But because ChatGPT also uses them, the detectors call them evidence of AI.

If you wrote an essay using any of these phrases and your teacher ran it through a detector, you'd be flagged as a cheat. Not because you cheated, but because you wrote clearly.
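To see why phrase-spotting on its own is a poor test, here is a toy sketch in Python. It is an assumption for illustration only and not how any named detector works: it simply counts the five phrases listed above. The function and variable names are hypothetical.

```python
import re

# The phrases listed above. A toy list for illustration, not any real
# product's rule set.
FLAGGED_PHRASES = [
    "let's be clear",
    "it's important to note",
    "furthermore",
    "in conclusion",
    "delve into",
]


def count_flagged_phrases(text):
    """Return how many times each listed phrase appears in the text."""
    # Lower-case the text and normalise curly apostrophes to straight ones.
    lowered = text.lower().replace("\u2019", "'")
    counts = {}
    for phrase in FLAGGED_PHRASES:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        counts[phrase] = len(re.findall(pattern, lowered))
    return counts


def total_hits(text):
    """Total number of flagged-phrase matches in the text."""
    return sum(count_flagged_phrases(text).values())


# A perfectly ordinary human sentence, written for this example.
human_sentence = (
    "Furthermore, it's important to note that the committee did not, "
    "in conclusion, change its mind."
)

print(total_hits(human_sentence))
```

A counter like this has no idea who wrote the sentence. It only knows the phrases are there, which is the whole problem with treating them as evidence.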

The Real Problem: Good Writing Looks Like Good Writing

Here's the uncomfortable truth nobody wants to admit: well-structured prose looks the same whether it's written by a human or generated by AI.

Why? Because AI is trained on human writing. Good human writing. It learns from Dickens, Orwell, Hemingway, journalism, textbooks, and millions of articles written by competent professionals.

When AI generates text, it mimics the patterns it learned from us. And when we write well, with clarity, structure, and proper grammar, we use those same patterns.

So how is a detector supposed to tell the difference? It can't. Not reliably. That's why the same text can score anywhere from 0% AI to 80% AI.

The Protection Racket

Here's where it gets really interesting. Most AI detectors offer their detection service for free. Very generous of them. But they also offer a "humanizer" function, a paid service that will rewrite your text to pass their detector.

Monthly subscriptions. Ten, twenty quid a month for 10,000 words or so.

Let me get this straight: they've built a detector that falsely flags human writing as AI, terrified you into thinking you'll be accused of cheating, then charged you to "fix" the problem they created?

That's not a service. That's a protection racket.

"Nice essay you've got there. Shame if someone thought it was written by a robot. Pay us £20 a month and we'll make sure that doesn't happen."

Digital extortion. Create the fear, sell the solution, profit on both ends.

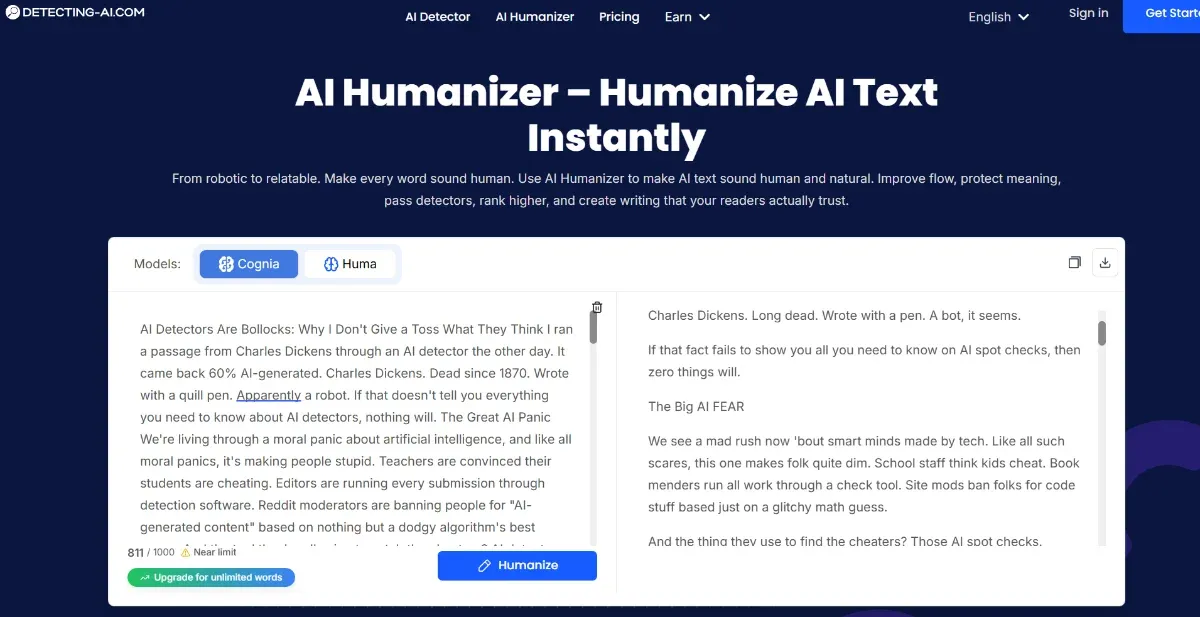

A screenshot of "humanized" text from this article. It turned well-written prose into gibberish.

What Does a "Humanizer" Actually Do?

Curious about what I'd be paying for, I ran an early draft of this article through one of these "humanizer" services.

Here's what it produced:

"I took a bit of text from old Charles Dickens. I put it in a thing that says if AI made it. It said sixty out of one hundred parts were from a bot. Charles Dickens. Long dead. Wrote with a pen. A bot, it seems. If that fact fails to show you all you need to know on AI spot checks, then zero things will."

Read that again. That's what they're charging £20 a month to produce.

They've taken clear, readable prose and turned it into word salad. "AI spot checks" instead of "AI detectors." "A thing that says if AI made it" instead of "an AI detector." "Sixty out of one hundred parts" instead of "60%." "Zero things will" instead of "nothing will."

It reads like it was written by someone who learned English last week from a dodgy phrasebook.

Here's another gem:

"We see a mad rush now 'bout smart minds made by tech. Like all such scares, this one makes folk quite dim."

That was supposed to be: "We're living through a moral panic about artificial intelligence, and like all moral panics, it's making people stupid."

And the absolute masterpiece:

"Those AI spot checks. Which turn out to be as good as a wax cup for hot tea."

Originally: "AI detectors, which turn out to be about as reliable as a chocolate teapot."

A wax cup for hot tea. Brilliant. That'll fool everyone into thinking a human wrote it.

The Kicker

Here's the truly insane part: this mangled nonsense would probably score lower on their AI detector than my original, clearly written text.

Because that's the scam. They've programmed their detector to flag clear, competent writing and approve incomprehensible garbage.

You're not paying to make your writing more human. You're paying to make it worse. Deliberately worse. So bad that even their own algorithm can't make sense of it.

And they're charging you for the privilege.

Why This Matters

Universities are using these detectors to accuse students of cheating. Editors are rejecting submissions. Online platforms are banning users. People's reputations and livelihoods are being damaged by software that can't tell Charles Dickens from ChatGPT.

And here's what really gets me: the detectors punish good writing.

Write clearly, with proper structure and transitions? Flagged. Write in a disjointed, awkward, unnatural style, like the "humanized" gibberish above? You pass.

The software is literally encouraging people to write worse to avoid detection.

That's not protecting academic integrity. That's vandalism. Profitable vandalism, because they're selling the vandalization service.

"But You Use AI, Don't You?"

Yes. I do. I use it for editing, much the same way I once used Grammarly or, back in the day, a paper dictionary and thesaurus when I worked for a living.

I write everything myself: the ideas, the research, the arguments, the voice. Then I use AI to suggest tighter phrasing, catch errors, spot repetition, and improve flow. It's a tool, like spell-check or a thesaurus, just more sophisticated.

Editors have done this work for centuries. Now AI does it faster. That doesn't make the writing any less mine.

The difference between using AI as a tool and having AI write for you is obvious to anyone actually reading the work. My articles have a consistent voice, personality, and perspective. They reference my specific experiences and knowledge. They're clearly written by a person, not generated by a prompt.

I'd love to meet the AI that could come up with the story about me at seven years old trying to roast baby frogs on Kilvey Hill in Swansea.

But an AI detector doesn't care about any of that. It just scans for patterns and spits out a number, a number designed to scare you into paying for their "solution."

The Dickens Test

Here's my challenge to anyone who believes AI detectors work: run your favourite authors through them.

Try Hemingway. Try Orwell. Try Joan Didion or Hunter S. Thompson. See what scores they get.

I guarantee you'll find that some of the greatest writers in the English language get flagged as robots.

Because these detectors aren't detecting AI. They're detecting clear, competent prose. And they're punishing people for it. Then charging them to make it worse.

The Bottom Line

AI detectors are pseudoscience wrapped in a protection racket. They're unreliable, inconsistent, and fundamentally flawed.

Using them to accuse people of cheating is like using a Ouija board to diagnose cancer. Paying for their "humanizer" service is like paying someone to smash your kneecaps with a hammer so you can't be accused of running too fast.

If you want to know whether something was written by a human, read it. Does it have a voice? Does it reference specific, verifiable knowledge? Does it have personality, quirks, inconsistencies? Does it sound like a person?

That's your detector. Your brain. Use it.

And if some algorithm tells you Charles Dickens is 95% AI, or that "a wax cup for hot tea" is better writing than "a chocolate teapot," maybe, just maybe, the problem isn't with Dickens or with you.

It's with the bloody algorithm. And the grifters making money from it.

So if you're wondering whether this article was written by AI, I'll save you the detector fee. No, it was written by a grumpy old Welshman with a low tolerance for bullshit.